I was going to write about the latest court decision, in which a Reagan-appointed judge said the Trump administration's anti-DEI orders are racist and homophobic. True story. Of course, the administration will appeal, but the opinion is blistering; read it here.

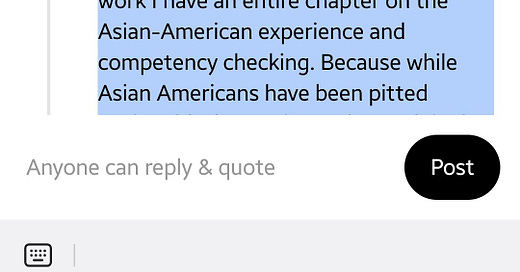

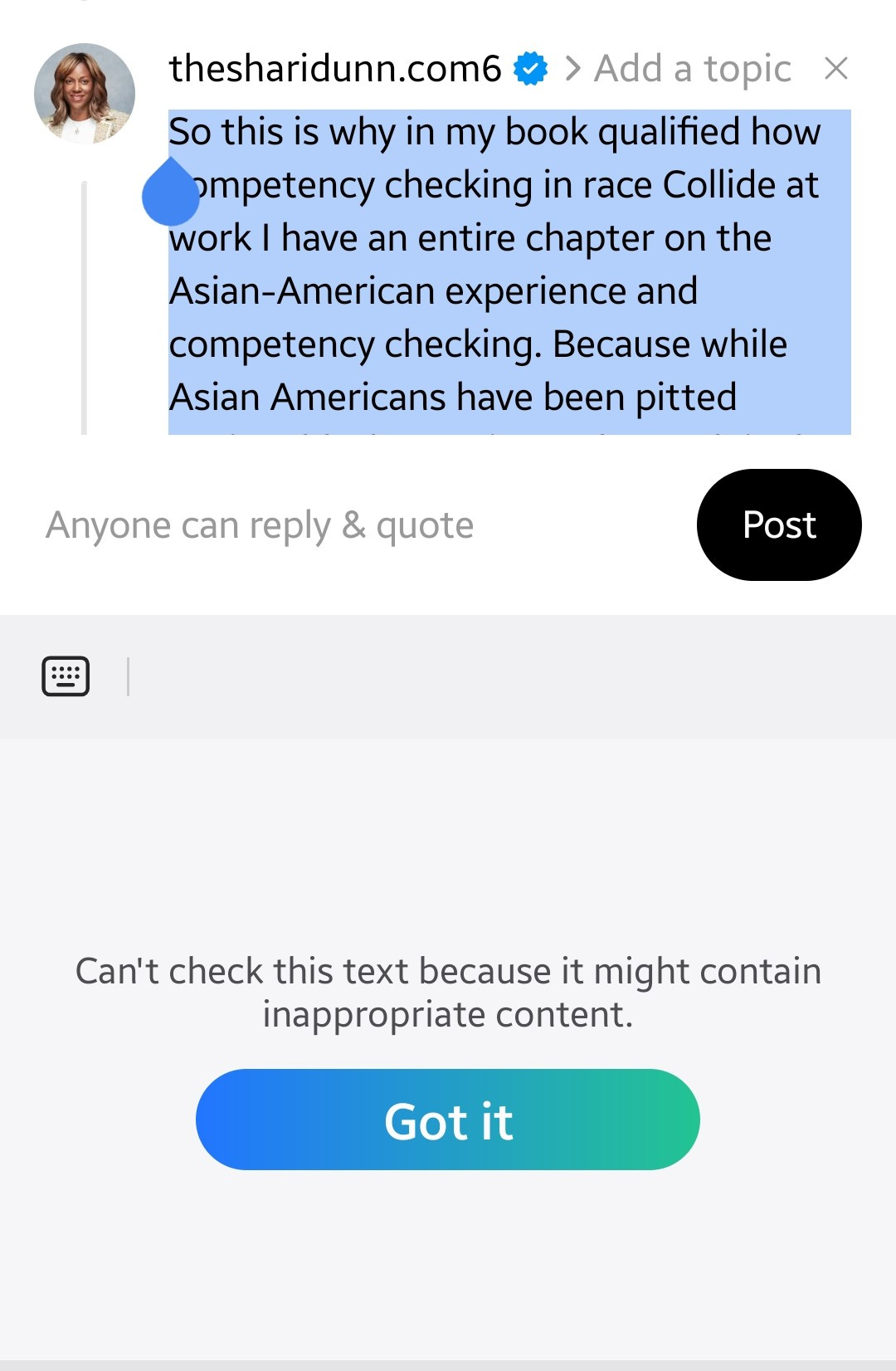

But then this happened. I typed out a post on Threads, something simple.

I was writing about my book, Qualified, specifically the section on the Asian-American experience and competency checking.

My phone’s AI spellcheck refused to review it.

The message?

“Can’t check this text because it might contain inappropriate content.”

Really?

Is inappropriateness in the room with us?

Because what I wrote wasn’t offensive. It was factual.

And yet the system treated it like a threat.

We were promised tools. What we’re getting is quiet censorship.

This is no longer about spelling or assistance.

This is about control.

About platforms deciding, without context, what’s “appropriate” to say.

🔍 Who decides what qualifies as inappropriate?

🔍 What happens when AI can't tell the difference between hate speech and simple descriptions?

🔍 What happens when discussing race is labeled as dangerous, while erasing the impact of race becomes the default?

Here’s the real issue:

This isn’t neutral. This is design.

And it’s happening more often.

❓ Is it happening to you?

❓ Have you noticed certain words or topics get blocked or flagged?

❓ Do you know others (especially people of color, women, and LGBTQ+ folks) who’ve been flagged for saying what needs to be said?

It raises a deeper question:

What voices are being pushed to the margins, not because they’re inappropriate, but because they challenge power?

This isn’t new. And it isn’t accidental.

Just ask:

🗣️ Dr. Timnit Gebru, forced out of Google for speaking up about bias in AI [1]

🗣️ Joy Buolamwini, who proved that facial recognition fails Black women, but whose work was largely ignored until the issue became marketable [2]

🗣️ Countless Black and Brown researchers, thinkers, and creators flagged, shadowbanned, or silenced, not for breaking rules, but for telling truths

AI doesn’t have context. It doesn’t understand nuance.

But it’s being used to police language, tone, and visibility.

So this post is really a question:

👉 Is this happening across AI models and tools?

👉 Who gets to decide what’s safe, what’s neutral, and what’s professional?

👉 How are we pushing back before these filters become the gatekeepers of public conversation?

Because if the people building these systems don’t look like us, speak like us, or center voices like ours, then we’re not users of AI; we’re its targets.

#AIethics #AlgorithmicBias #TechCensorship #QualifiedAtTheIntersection #SpeechRights #WhoGetsToSpeak #JoyBuolamwini #TimnitGebru #RaceAndTechnology #AntiRacism #DigitalPower

[1] https://www.nytimes.com/2020/12/03/technology/google-researcher-timnit-gebru.html

[2] https://www.npr.org/2023/11/28/1215529902/unmasking-ai-facial-recognition-technology-joy-buolamwini
